2024 MMVRAC Winners

![]()

![]()

Check out the leaderboard posted on 15 July 2024 under MMVRAC Workshop

ICME 2024

Jun LIU

Singapore University of Technology and Design

Bingquan SHEN

DSO National Laboratories and National University of Singapore

Ping HU

Boston University

Kian Eng ONG

Singapore University of Technology and Design

Haoxuan QU

Singapore University of Technology and Design

Duo PENG

Singapore University of Technology and Design

Lanyun ZHU

Singapore University of Technology and Design

Lin Geng FOO

Singapore University of Technology and Design

Xun Long NG

Singapore University of Technology and Design

We thank all participants for their effort and time.

MMVRAC Workshop

2pm to 5pm, Hannepin Ballroom A & B 1F

- Keynote Address: Physics-driven AI for multi-modal imaging and restoration by Prof. Bihan Wen, Nanyang Assistant Professor, Nanyang Technological University

- Certificate presentation to winners, followed by oral presentation by 1st place winner of each track

Download Certificate of Achievement for Winner of each track - Poster presentation and Networking session

Download posters

by Prof. Bihan Wen, Nanyang Assistant Professor, Nanyang Technological University

Machine learning techniques have received increasing attention in recent years for solving various imaging problems, creating impacts in important applications, such as remote sensing, medical imaging, smart manufacture, etc. This talk will first review some recent advances in machine learning from modeling, algorithmic, and mathematical perspectives, for imaging and reconstruction tasks. In particular, he will share some of their recent works on the physics-driven deep learning and how it can be applied to the imaging applications for different modalities. He will show how the underlying image model evolves from signal processing to building deep neural networks, while the "old" ways can also join the new. It is critical to incorporate both the flexibility of deep learning and the underlying imaging physics to achieve the state-of-the-art results.

Certificate of Participation

Download Certificate of Participation

Participants who have successfully completed the Challenge (submitted code, models etc) should have received their Certificate of Participation through email.

The Winning Teams of each track will be presented with their Winner's Certificate at the ICME workshop.Submission Guidelines

Code Submission Terms and Conditions

Participants are to adhere to the following terms and conditions. Failure to do so will result in disqualification from the Challenge.

- Participants can utilize one or more modalities provided in the dataset for the task.

- Participants can also use other datasets or pre-trained models etc.

- The model should not be trained on any data from the test set.

- Participants are required to submit their results the link to their training data, code, and model repositories by the Challenge submission deadline.

- The codes need to be open-source and the same results can be reproduced by others. Hence, the submission should include:

- list of Python libraries / dependencies for the codes

- pre-training / training data (if different from what we provided) and pre-processing or pre-training codes

- training and inference codes

- trained model

- documentation / instruction / other relevant information to ensure the seamless execution of the codes (e.g., easy means to read in test data directly by simply replacing the test data directory DIR_DATA_TEST)

- Participants can upload their project (codes and models) to GitHub, Google Drive or any other online storage providers.

Paper Submission

- We will contact the top teams of each track to submit the paper.

- The paper submission will follow the ICME 2024 guidelines (https://2024.ieeeicme.org/author-information-and-submission-instructions)

- Length: Papers must be no longer than 6 pages, including all text, figures, and references. Submissions may be accompanied by up to 20 MB of supplemental material following the same guidelines as regular and special session papers.

- Format: Workshop papers have the same format as regular papers.

Challenge Details

Given the enormous amount of multi-modal multi-media information that we encounter in our daily lives (including visuals, sounds, texts, and interactions with their surroundings), we humans process such information with great ease – we understand and analyze, think rationally and reason logically, and then predict and make sound judgments and informed decision based on various modalities of information available.

For machines to assist us in holistic understanding and analysis of events, or even to achieve such sophistication of human intelligence (e.g., Artificial General Intelligence (AGI) or even Artificial Super Intelligence (ASI)), they need to process visual information from real-world videos, alongside complementary audio and textual data, about the events, scenes, objects, their attributes, actions, and interactions.

Hence, we hope to further advance such developments in multi-modal video reasoning and analyzing for different scenarios and real-world applications through this Grand Challenge using various challenging multi-modal datasets with different types of computer vision tasks (i.e., video grounding, spatiotemporal event grounding, video question answering, sound source localization, person reidentification, attribute recognition, pose estimation, skeleton-based action recognition, spatiotemporal action localization, behavioral graph analysis, animal pose estimation and action recognition) and multiple types of annotations (i.e., audio, visual, text). This Grand Challenge will culminate in the 2nd Workshop on Multi-Modal Video Reasoning and Analyzing Competition (MMVRAC).

The Challenge Tracks are based on the following video datasets:

Chaotic World (https://github.com/sutdcv/Chaotic-World)

This challenging multi-modal (video, audio, and text) video dataset that focuses on chaotic situations around the world comprises complex and dynamic scenes with severe occlusions and contains over 200,000 annotated instances for various tasks such as spatiotemporal action localization (i.e., spatially and temporally locate the action), behavioral graph analysis (i.e., analyze interactions between people), spatiotemporal event grounding (i.e., identifying relevant segments in long videos and localizing people, scene, and behavior-of-interest), and sound source localization (i.e., spatially locate the source of sound). This will promote deeper research into more robust models that capitalize various modalities and handle such complex human behaviors / interactions in dynamic and complex environments.

Track: Spatiotemporal Action Localization

Track: Sound Source Localization

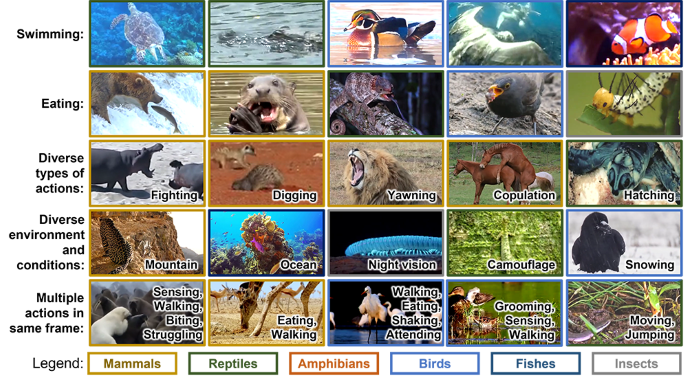

Animal Kingdom (https://github.com/sutdcv/Animal-Kingdom)

This animal behavioral analysis dataset comprises 50 hours of long videos with corresponding text descriptions of the scene, animal, and behaviors to localize the relevant temporal segments in long videos for video grounding (i.e., identifying relevant segments in long videos), 50 hours of annotated video sequences for animal action recognition (i.e. identifying the action of the animal), and 33,000 frames for animal pose estimation (i.e., predicting the keypoints of the animal) for a diversity of over 850 species. This will facilitate animal behavior analysis, which is especially important for wildlife surveillance and conservation.

Track: Video Grounding

Track: Animal Action Recognition

Track: Animal Pose Estimation for Protocol 1 (All animals)

UAV-Human (https://github.com/sutdcv/UAV-Human)

This human behavior analysis dataset contains more than 60,000 video samples (bird-eye views of people and actions taken from a UAV) for pose estimation (i.e., predicting the keypoints of the individual), skeleton-based action recognition (i.e. identifying the action of the individual based on keypoints), attribute recognition (i.e. identifying the features / characteristics of the individual), as well as person reidentification (i.e. matching a person's identity across various videos) tasks. This will facilitate human behavior analysis from different vantage points, and will be useful for the development and applications of UAVs.

Track: Skeleton-based Action Recognition

Track: Attribute Recognition

Track: Person Reidentification

Participants are required to register their interest to participate in the Challenge.

The Grand Challenge is open to individuals from institutions of higher education, research institutes, enterprises, or other organizations.

- Each team can consist of a maximum of 6 members. The team can comprise individuals from different institutions or organizations.

- Participants can also participate as individuals.

- Participants will take on any one of the tracks or more than one track in the Challenge Tracks.

- All participants who successfully complete the Challenge by the Challenge deadline and adhere to the Submission Guidelines will receive a Certificate of Participation.

-

The top three teams of each track are required to submit a paper and present at the MMVRAC workshop (oral or poster session).

- They will be notified by the Organizers and are required to submit their paper for review by 06 April 2024.

- They will need to submit their camera-ready paper, register for the conference by 01 May 2024, and present at the conference; otherwise they their paper will not be included in IEEE Xplore.

- A Grand Challenge paper is covered by a full-conference registration only.

- They will receive the Winners' Certificate and their work (link to their training data, code, and model repositories) will be featured on this website.

Evaluation Metric and Judging Criteria

The evaluation metric of each task follows the metric indicated in the original paper.

- In cases of task whereby there are sub-tasks, the sub-task is specified in the Challenge track.

- In cases whereby there are a few evaluation metrics for the task, the combination of all metrics will be taken into consideration to evaluate the robustness of the model.

- In cases whereby there are multiple submissions for the same task, only the last submission will be considered.

- In the event of same results for the same task, the team with the earlier upload date and time will be considered as the winner.

01 May 2024

Deadline for camera-ready submission of accepted paper

01 May 2024

Author Full Conference Registration